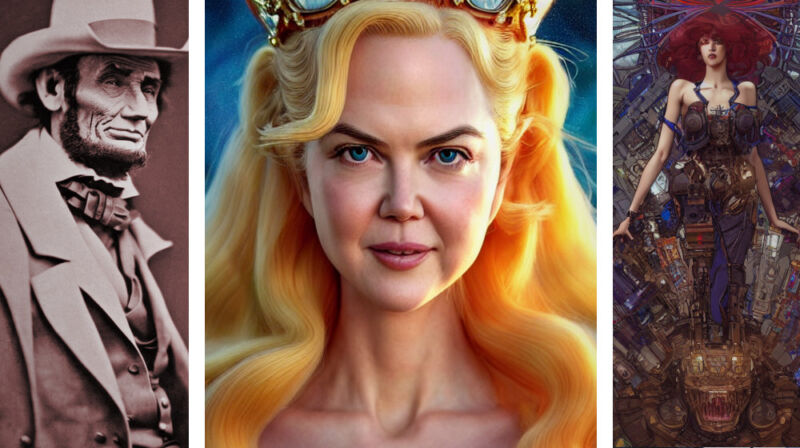

Did you know that Abraham Lincoln was a cowboy? Stable Diffusion does. (credit: Benj Edwards / Stable Diffusion)

AI image generation is here in a big way. A newly released open source image synthesis model called Stable Diffusion allows anyone with a PC and a decent GPU to conjure up almost any visual reality they can imagine. It can imitate virtually any visual style, and if you feed it a descriptive phrase, the results appear on your screen like magic.

Some artists are delighted by the prospect, others aren't happy about it, and society at large still seems mostly unaware of the rapidly evolving tech revolution taking place through communities on Twitter, Discord, and GitHub. Image synthesis arguably brings implications as big as the invention of the camera, or perhaps the creation of visual art itself. Even our sense of history might be at stake, depending on how things shake out. Either way, Stable Diffusion is leading a new wave of deep learning creative tools that are poised to revolutionize the creation of visual media.

The rise of deep learning image synthesis

Stable Diffusion is the brainchild of Emad Mostaque, a London-based former hedge fund manager whose aim is to bring novel applications of deep learning to the masses through his company, Stability AI. But the roots of modern image synthesis date back to 2014, and Stable Diffusion wasn't the first image synthesis model (ISM) to make waves this year.
